- LLMs like GPT-5 and Gemini are reshaping how businesses automate, communicate, and innovate.

- Fine-tuned AI and retrieval systems drive faster, more accurate decisions.

Large Language Models: How They’re Changing the Way We Work and Build

Published on: 12 February, 2026

Last updated on: 21 February, 2026

Back in late 2022, everything changed when large language models (LLMs) became widely accessible through tools like ChatGPT.

At Mediusware, we felt excited but unsure. AI and LLMs were no longer just ideas; they were real and everywhere.

As a CTO, I often asked myself:

How will this change software development?

Through 2023 and 2024, businesses started:

- Automating boring tasks

- Speeding up marketing

- Improving customer support with smarter chatbots

But with all the excitement came real challenges: ethical questions, tricky setups, and doubts about real value. As a trusted tech partner to many businesses, I often talk with CEOs and founders. It surprises me how many still don’t fully understand what LLMs are, how they work, or what they can really do.

This knowledge gap matters because misunderstanding LLMs can lead to missed chances, wasted budgets, or solutions that don’t scale. So, I’ve decided to share some insights from my experience.

In this article, I’ll explain LLMs clearly and explore what the future holds.

What Exactly Are LLMs?

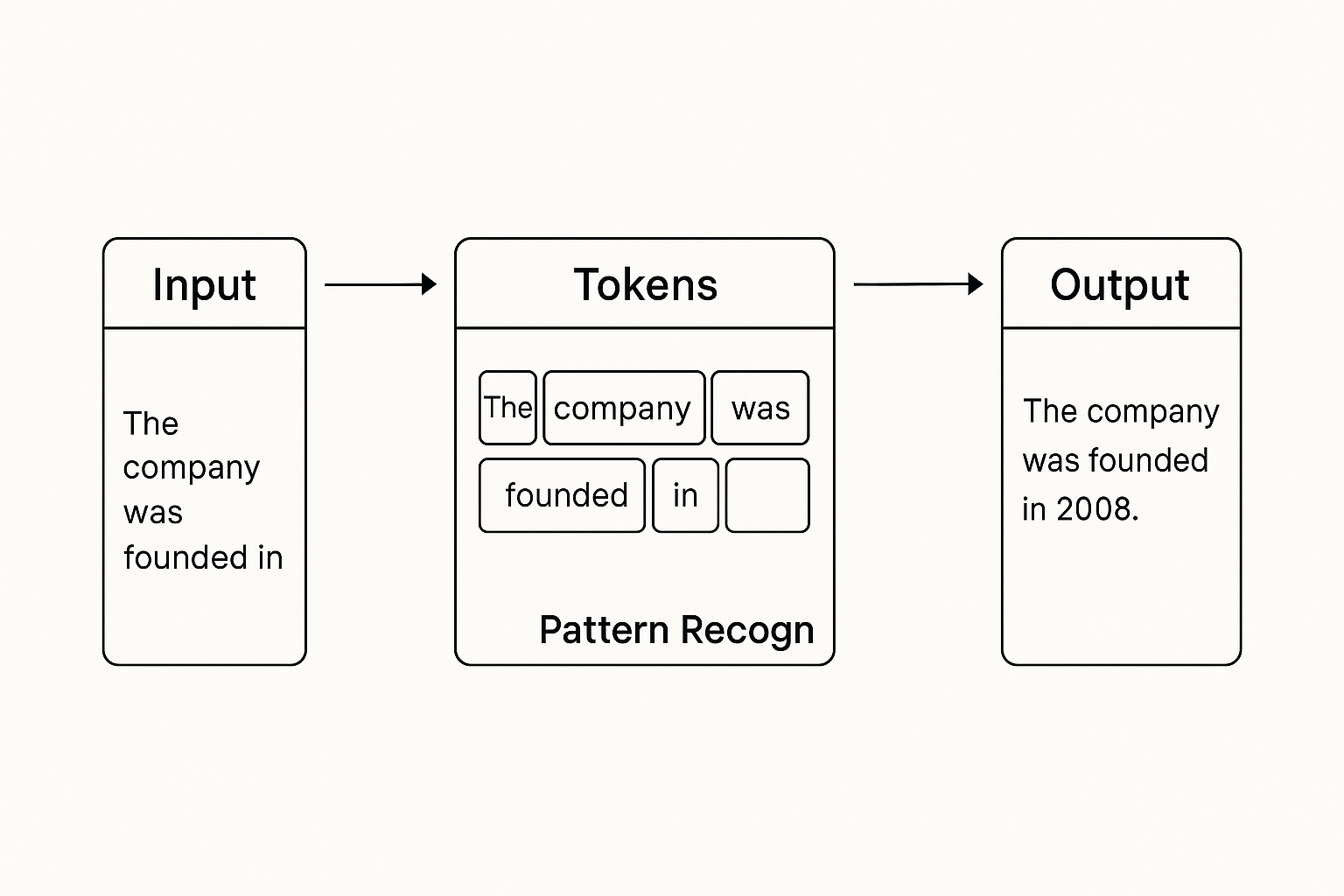

A Large Language Model can be imagined as a smarter version of predictive typing on your phone. You type a few words, and it guesses what comes next. But instead of relying on a dictionary, it learns from billions of examples of human language.

At its core, an LLM is trained on tiny pieces of text called tokens. It learns how words tend to appear together and which patterns sound most natural.
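To make tokens concrete, here is a toy Python sketch that turns a sentence into tokens and then into the integer IDs a model actually works with. It assumes a naive split on words and punctuation; real LLM tokenizers use learned subword schemes such as byte-pair encoding, so they would split this text differently. The `tokenize` and `encode` helpers are hypothetical names for illustration.

```python
import re

def tokenize(text: str) -> list[str]:
    # Split lowercase text into words and single punctuation marks.
    # Toy rule only: real LLM tokenizers use learned subword units.
    return re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())

def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    # Give each new token the next free integer ID; repeated tokens reuse their ID.
    ids = []
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
        ids.append(vocab[tok])
    return ids

vocab: dict[str, int] = {}
tokens = tokenize("Write a thank-you email to a client.")
ids = encode(tokens, vocab)
print(tokens)  # ['write', 'a', 'thank', '-', 'you', 'email', 'to', 'a', 'client', '.']
print(ids)     # [0, 1, 2, 3, 4, 5, 6, 1, 7, 8]
```

Notice that the repeated word "a" maps to the same ID both times: the model sees numbers, not letters.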

According to IBM, large language models are AI systems capable of understanding and generating human language by processing vast amounts of text data. They work as giant statistical prediction machines that repeatedly predict the next word in a sequence.

When you ask it a question, it doesn’t look up an answer. Instead, it predicts what a plausible response would look like, one word at a time.

Traditional software follows clear rules, like “if this happens, then do that.” LLMs work differently. They calculate the most likely next word based on everything you’ve written so far. That’s why they feel conversational: they don’t just process data, they pick up the flow and tone of human writing.
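The idea of picking the most likely next word can be sketched with a bigram model, which counts which word follows which in some training text. This is a deliberate simplification: the three-sentence corpus is made up, and the sketch looks only at the previous word, whereas a real LLM uses a neural network over the whole context.

```python
from collections import Counter, defaultdict

# A tiny, made-up training corpus (real models learn from billions of words).
corpus = [
    "ai helps teams automate tasks",
    "ai helps teams communicate faster",
    "ai helps teams automate reports",
]

# Count how often each word follows each other word (a bigram model).
follow_counts: dict[str, Counter] = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        follow_counts[prev][nxt] += 1

def next_word_probs(prev: str) -> dict[str, float]:
    # Turn raw counts into probabilities for the word that follows `prev`.
    counts = follow_counts[prev]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

probs = next_word_probs("teams")
print(probs)                      # {'automate': 0.6666666666666666, 'communicate': 0.3333333333333333}
print(max(probs, key=probs.get))  # automate
```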

Mini Case: Everyday Use

- Customer Support Chatbots

LLMs help chatbots answer questions quickly by using customer info, making support faster and better.

- Document Summarization

LLMs can quickly sum up long documents like contracts or reports, saving time and effort.

- Content Creation

LLMs create marketing content like social media posts and product descriptions, helping teams work faster.

How LLMs Actually Work

To understand how these models think, let’s simplify things. Most modern language models are built on an architecture called the Transformer. It’s a neural network that learns to pay attention to the most important parts of your input text. In simple terms, attention means weighing which earlier words matter most for the word being processed.

For example, suppose you ask ChatGPT, “My laptop won’t turn on. Should I take it to a repair shop, or can I fix it myself?” The model works out that “it” refers to your laptop. It does this not by memorizing facts but by learning how words and ideas connect.
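The attention step behind this can be sketched in a few lines. The sketch assumes the standard scaled dot-product formulation from the original Transformer paper, softmax(q·k / √d) applied to value vectors, for a single query. The 2-D vectors are invented so that the query for “it” points in a similar direction to “laptop”; in a real model these vectors are learned and produced by trained projection layers.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    # Subtract the max for numerical stability, then normalize so weights sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query: list[float], keys: list[list[float]],
              values: list[list[float]]) -> tuple[list[float], list[float]]:
    # Scaled dot-product attention for one query:
    # weights = softmax(q . k / sqrt(d)); output = weighted sum of value vectors.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output

# Hypothetical 2-D vectors for three earlier words; real models use
# hundreds or thousands of learned dimensions.
words = ["my", "laptop", "shop"]
keys = [[0.1, 0.0], [2.0, 1.5], [0.5, 0.2]]
values = keys          # reuse keys as values to keep the toy small
query_it = [2.0, 1.0]  # made-up query vector for the word "it"

weights, output = attention(query_it, keys, values)
for word, w in zip(words, weights):
    print(f"{word}: {w:.2f}")  # "laptop" gets by far the largest weight
```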

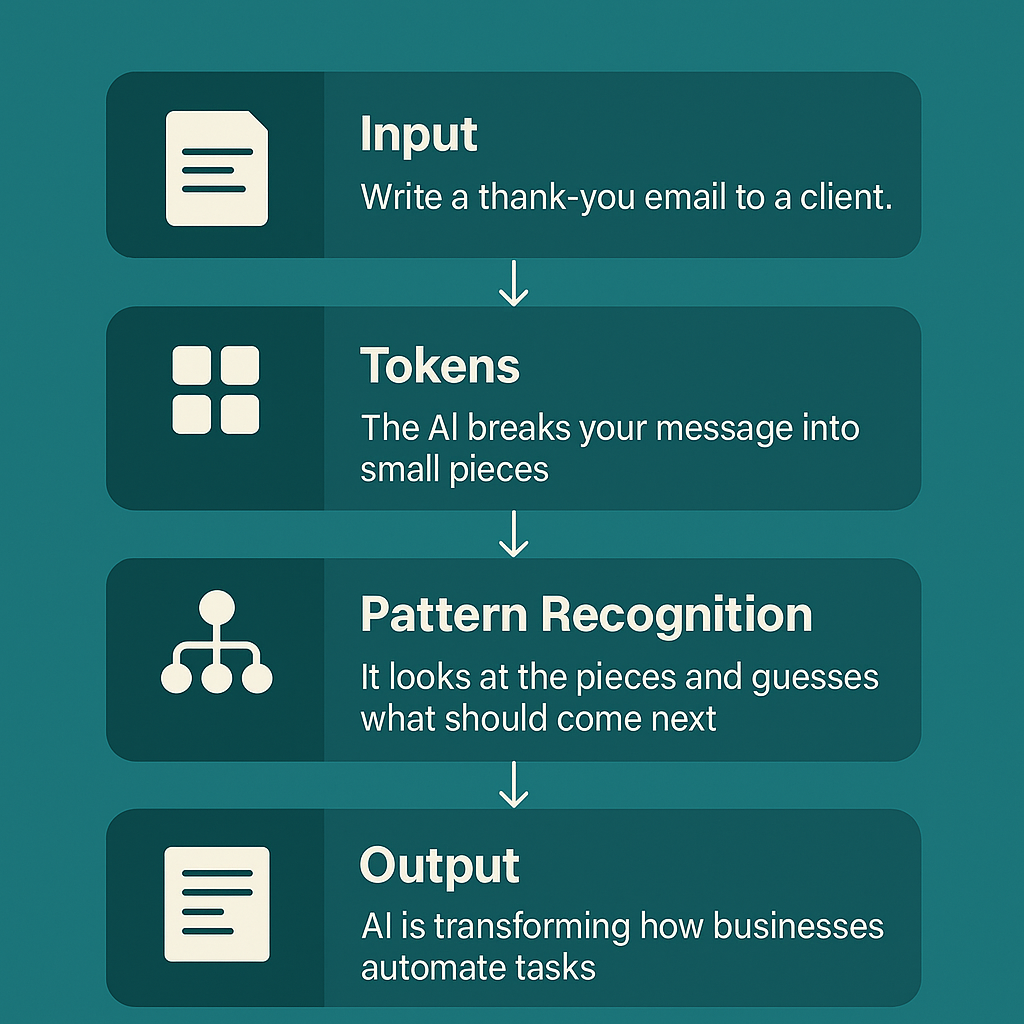

Step by Step: From Input to Output

- You type something, like “Write a thank-you email to a client.”

- The AI breaks your message into small pieces (tokens).

- It looks at the pieces and guesses what should come next.

- It keeps guessing one piece at a time until the full reply is ready.
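These four steps form a loop. Here is a minimal sketch of that loop using greedy decoding (always taking the top guess). `TOY_MODEL` is a hypothetical lookup table standing in for the neural network; a real model would score every token in its vocabulary using the full context, not just the last token.

```python
# Hypothetical stand-in for a trained model: maps the last token to the
# most likely next token.
TOY_MODEL = {
    "<start>": "thank",
    "thank": "you",
    "you": "for",
    "for": "your",
    "your": "business",
    "business": ".",
    ".": "<end>",
}

def generate(prompt_tokens: list[str], max_new_tokens: int = 20) -> list[str]:
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = TOY_MODEL.get(tokens[-1], "<end>")  # guess the next piece
        if next_token == "<end>":  # stop once the model signals the reply is done
            break
        tokens.append(next_token)  # add it and repeat with the longer context
    return tokens

reply = generate(["<start>"])
print(" ".join(reply[1:]))  # thank you for your business .
```

The `max_new_tokens` cap mirrors the length limits real LLM apps apply, so generation always ends even if the model never signals it is done.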

Here’s another example:

If you start with “AI is transforming how businesses,” the model might continue with outputs like:

- automate tasks

- communicate faster

- and make smarter decisions.
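Why different continuations? Many LLM apps sample from the model’s probabilities rather than always taking the top word, and a temperature setting controls how adventurous that sampling is. The sketch below assumes made-up scores for three possible next words and shows how a low temperature almost always picks the top option while a higher one spreads the choices out.

```python
import math
import random

def sample_next(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    # Divide scores by temperature, apply softmax, then draw one option.
    # Low temperature -> almost always the top option; high -> more variety.
    scaled = {tok: s / temperature for tok, s in scores.items()}
    m = max(scaled.values())
    weights = {tok: math.exp(s - m) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]

# Made-up scores for the word after "AI is transforming how businesses"
scores = {"automate": 2.0, "communicate": 1.5, "make": 1.0}

rng = random.Random(0)  # fixed seed so the run is repeatable
for temp in (0.1, 1.0):
    picks = [sample_next(scores, temp, rng) for _ in range(1000)]
    print(temp, {tok: picks.count(tok) for tok in scores})
```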

Why don’t you try it yourself?

Type the same phrase into any LLM app and see how it continues!

Why LLMs Sometimes Make Mistakes

Even the best models can be confidently wrong. This is what experts call a hallucination. A hallucination happens when an LLM produces an answer that sounds believable but isn’t true. It happens because the model has no real understanding of facts and, by default, no live access to them. It simply predicts what should come next based on patterns.

According to David Villalon-Pardo, a well-known AI researcher, hallucination is a property of large language models that can't be fully eliminated. Despite advances, confident but false outputs remain a key challenge, especially in high-stakes use.

Imagine asking, “Who won the 2025 Nobel Prize in Chemistry?” If the prize was announced after the model’s training data was collected, the model might still invent a name, because it has learned how those questions are usually answered.

Bias is another issue.

If trained on skewed datasets, LLMs can reflect cultural or demographic biases, leading to problematic responses. Overconfidence, presenting guesses as certainties, further complicates things, particularly in high-stakes fields like healthcare or law.

Best Solutions to Reduce Hallucinations in 2025

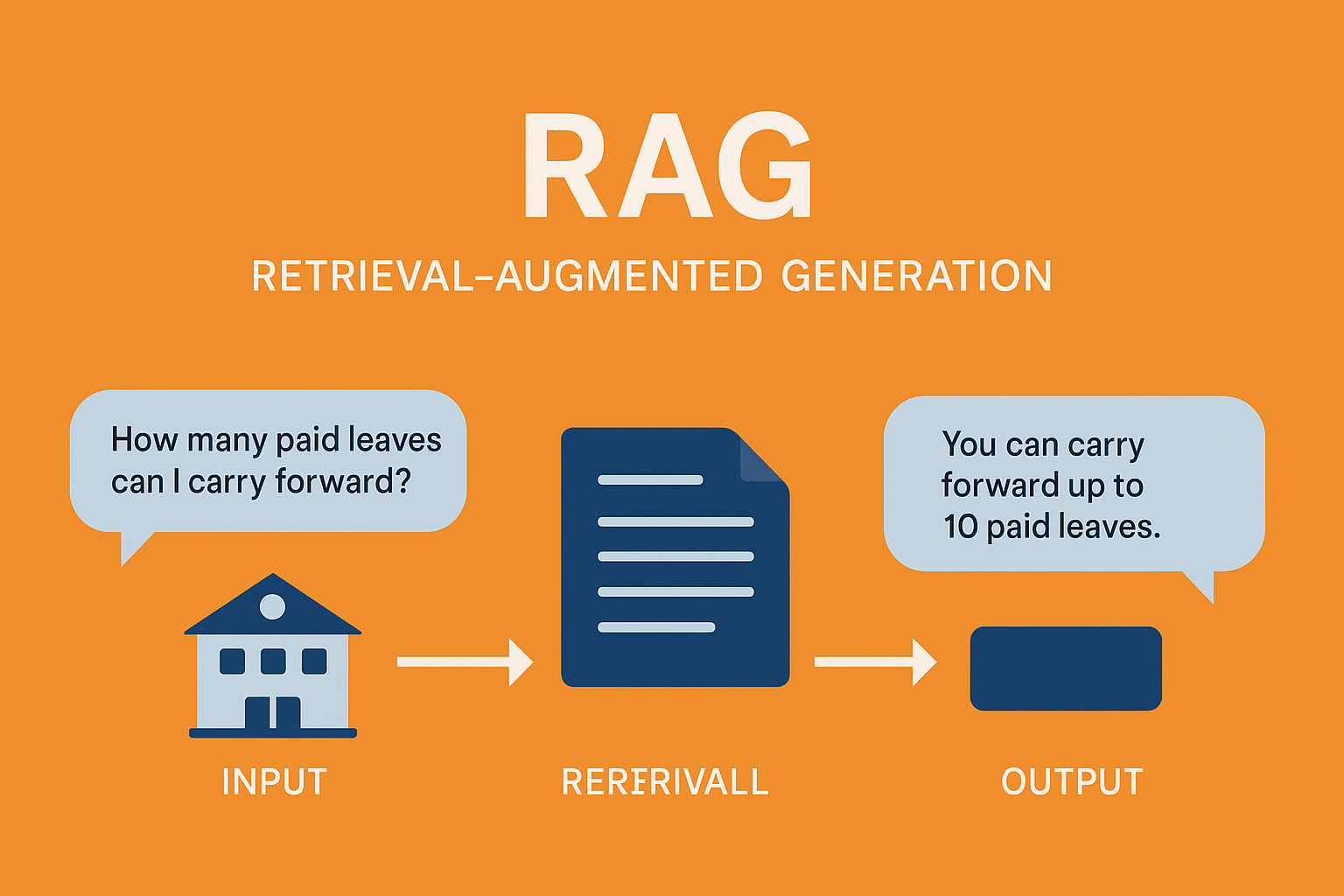

- Retrieval-Augmented Generation (RAG)

RAG helps AI give more accurate answers by pulling in real data before responding. For example, an HR chatbot can use RAG to check company policies when answering questions about leave.

- Reasoning Models

Newer reasoning models, such as Claude 4.5 and OpenAI’s o1 series, work through problems step by step (an approach called chain-of-thought) before answering, which tends to improve accuracy on complex questions.

- Self-Verification

Some models now check their own answers against trusted sources. This helps reduce made-up or incorrect information.
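As a rough illustration of checking an answer against a source, the sketch below flags answer sentences whose key terms mostly do not appear in a trusted document. Word overlap is a crude, hypothetical stand-in: production self-verification typically uses a second model pass or an entailment check, and the 0.6 threshold here is arbitrary.

```python
import re

def key_terms(text: str) -> set[str]:
    # Words of 4+ letters plus any numbers, skipping filler like "the" or "are".
    return set(re.findall(r"[a-z]{4,}|\d+", text.lower()))

def unsupported_sentences(answer: str, source: str, min_overlap: float = 0.6) -> list[str]:
    # Flag answer sentences whose key terms mostly do not appear in the source.
    source_terms = key_terms(source)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        terms = key_terms(sentence)
        if terms and len(terms & source_terms) / len(terms) < min_overlap:
            flagged.append(sentence)
    return flagged

# Hypothetical policy text and model answer
policy = "Employees may carry forward up to 5 unused paid leave days into the next year."
answer = ("Employees may carry forward up to 5 unused paid leave days. "
          "Unused days are paid out in cash every December.")
print(unsupported_sentences(answer, policy))  # ['Unused days are paid out in cash every December.']
```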

How Retrieval Improves Accuracy

A growing trend in 2025 is something called Retrieval-Augmented Generation, often shortened to RAG. It allows the model to look up relevant information before answering. For example, an HR assistant powered by RAG can search through company policies before replying to “How many paid leaves can I carry forward?”

Instead of guessing, it finds the exact line from the HR manual and uses that to craft its answer. That’s the difference between guessing and verifying. To reduce errors, give the model clear instructions, use verified data, and always review critical outputs.
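A minimal sketch of the RAG flow described above: find the most relevant passage, then place it in the prompt with an instruction to answer only from it. The HR passages are hypothetical, ranking by shared words stands in for the embedding search real systems use, and the resulting prompt would be sent to whichever LLM you use (no specific API is assumed).

```python
import re

# Hypothetical HR manual, split into passages
HR_MANUAL = [
    "Annual leave: employees receive 20 paid leave days per year.",
    "Carry forward: up to 5 unused paid leave days can be carried into the next year.",
    "Remote work: staff may work remotely two days per week with manager approval.",
]

def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, passages: list[str], k: int = 1) -> list[str]:
    # Rank passages by how many words they share with the question.
    q = terms(question)
    ranked = sorted(passages, key=lambda p: len(q & terms(p)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, passages: list[str]) -> str:
    # Put the retrieved text before the question and tell the model to stay within it.
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the policy excerpts below. "
        "If the answer is not there, say you don't know.\n"
        f"Policy excerpts:\n{context}\n\nQuestion: {question}"
    )

question = "How many paid leaves can I carry forward?"
prompt = build_prompt(question, retrieve(question, HR_MANUAL))
print(prompt)
```

The instruction to admit “I don’t know” matters as much as the retrieval: it gives the model a sanctioned alternative to guessing.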

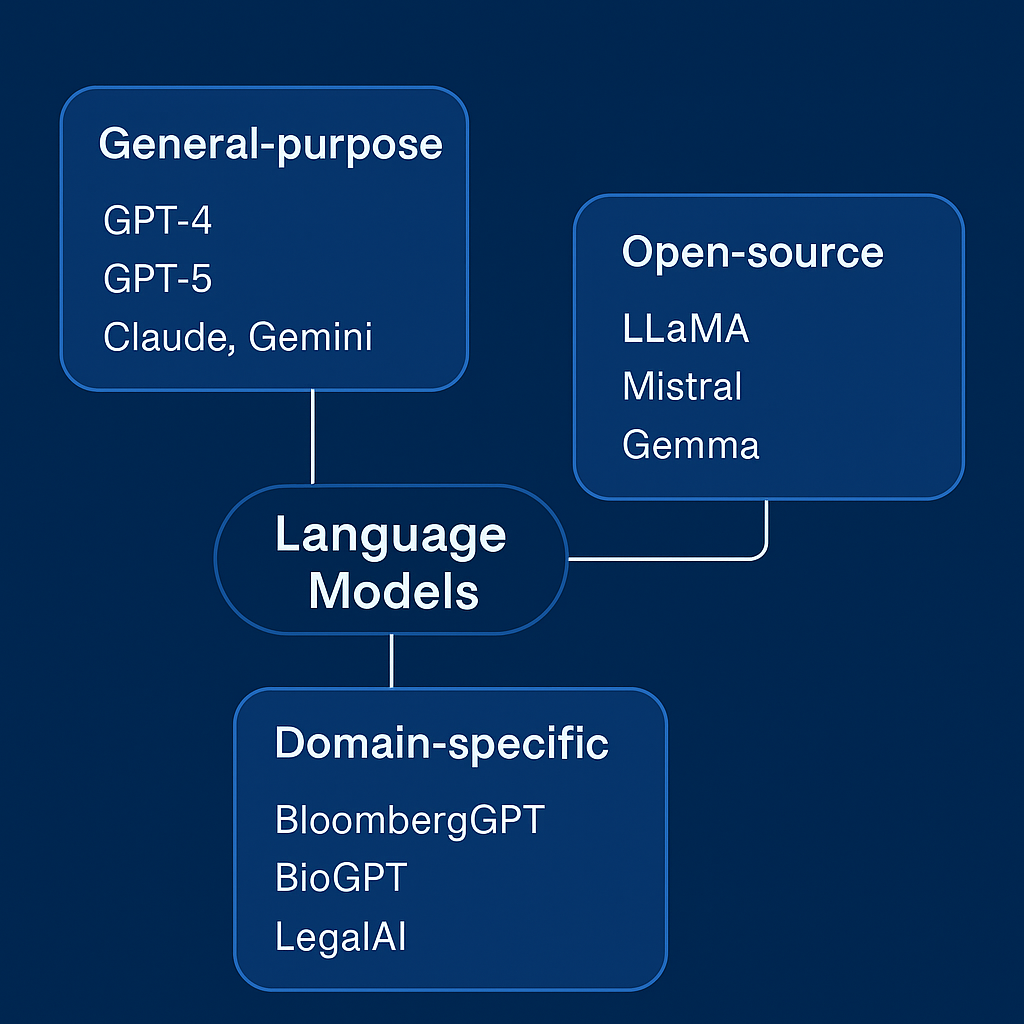

The Main Types of LLMs

Language models come in several varieties, depending on how they are built and what they are used for.

| Type | Examples | Strengths | Challenges | Best Use |
|---|---|---|---|---|
| General-purpose | GPT-4, GPT-5, Claude, Gemini | Versatile, creative, multilingual | Costly, may hallucinate | Writing, marketing, research |
| Open-source | LLaMA, Mistral, Gemma | Customizable, private, affordable | Requires technical setup | Regulated industries, data-sensitive work |
| Domain-specific | BloombergGPT, BioGPT, LegalAI | Deep knowledge in one field | Limited outside domain | Finance, healthcare, legal work |

Example use cases:

- A marketing team might use GPT-5 to create campaigns.

- A fintech company could host its own Gemma model to protect customer data.

- A hospital might rely on BioGPT to summarize patient reports accurately.

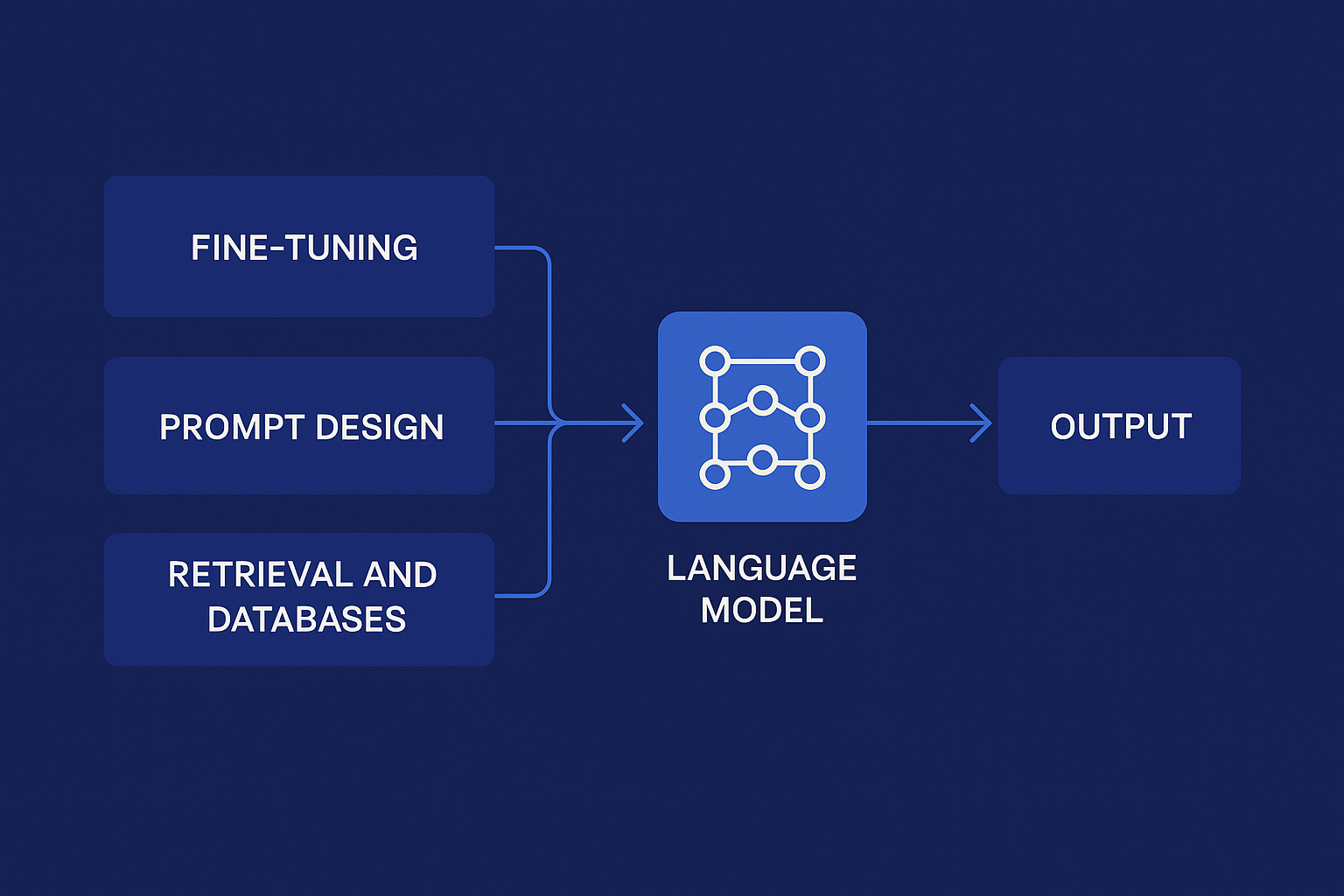

How Businesses Customize LLMs

Buying access to an LLM is only the beginning. The real value comes from customization. According to Forbes, around 37% of enterprises spend over $250,000 annually on LLMs, and 73% spend more than $50,000 yearly. Furthermore, 72% of organizations plan to increase their AI spending in 2025, showing strong financial commitment.

Fine-tuning the model

Fine-tuning means teaching the AI with your company’s own data. This could include your tone of voice, internal knowledge, customer queries, or product details. At Mediusware, we’ve seen how fine-tuning helps businesses make their AI assistants more accurate, relevant, and consistent with brand values.

Prompt design

Prompt design is about giving clear and specific instructions. The difference between a vague and a well-structured prompt can completely change the outcome. Instead of saying, “Summarize this report,” say, “Summarize this report for senior management in three bullet points with key numbers.”
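One way to make the specific version repeatable is a small prompt template that forces you to state the audience, format, and required content. `build_summary_prompt` is a hypothetical helper, and the wording is just one possible template.

```python
def build_summary_prompt(report: str, audience: str, bullets: int, must_include: str) -> str:
    # Spell out audience, format, and required content instead of a bare request.
    return (
        f"Summarize the report below for {audience}.\n"
        f"Format: exactly {bullets} bullet points.\n"
        f"Include: {must_include}.\n\n"
        f"Report:\n{report}"
    )

vague = "Summarize this report."
specific = build_summary_prompt(
    report="(report text goes here)",
    audience="senior management",
    bullets=3,
    must_include="key numbers",
)
print(vague)
print(specific)
```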

Better prompts lead to better results. For example, Mediusware's business development and marketing team uses a variety of prompts to save time and automate frequent tasks.

Small upgrades instead of full retraining

There are lightweight ways to customize a model without rebuilding it. Techniques like LoRA (Low-Rank Adaptation) keep the original model frozen and train small add-on matrices for selected layers, instead of retraining everything from scratch. It’s like upgrading a feature instead of building a whole new system.
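A sketch of the idea behind LoRA, assuming the formulation from the original LoRA paper: the pretrained weight matrix W stays frozen, and only two small matrices B (d × r) and A (r × k) are trained, so the effective weight becomes W + (α / r)·BA. The tiny matrices are made-up numbers, and the 4096 × 4096 layer with rank 8 is a hypothetical configuration used to show the parameter savings.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    # Plain-Python matrix product (rows of a times columns of b).
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_weight(w: list[list[float]], b: list[list[float]], a: list[list[float]],
                alpha: float, rank: int) -> list[list[float]]:
    # Effective weight = frozen W + (alpha / rank) * (B @ A).
    # Only B (d x r) and A (r x k) would be trained; W never changes.
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def param_counts(d: int, k: int, rank: int) -> tuple[int, int]:
    # Trainable parameters: full fine-tuning of a d x k matrix vs. the LoRA factors.
    return d * k, rank * (d + k)

# Tiny example: a frozen 2x2 weight with a rank-1 update (made-up numbers).
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[0.5], [0.0]]  # 2 x 1
A = [[0.0, 2.0]]    # 1 x 2
print(lora_weight(W, B, A, alpha=1.0, rank=1))  # [[1.0, 1.0], [0.0, 1.0]]

full, lora = param_counts(4096, 4096, rank=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Under these assumptions, LoRA trains well under 1% of the layer’s parameters, which is why it is so much cheaper than full retraining.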

Adding retrieval and databases

Many companies now combine their AI with internal document libraries. They use vector databases to store information in a searchable way, so the AI can pull real facts before answering. For example, a hospital chatbot might retrieve the latest treatment protocol before advising a doctor.
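A vector database boils down to storing embeddings and returning the entries closest to a query. The sketch below is a toy in-memory version using cosine similarity; the 3-D vectors are invented, whereas a real system would get them from an embedding model and use an approximate nearest-neighbor index to search millions of entries quickly.

```python
import math

class InMemoryVectorStore:
    """Toy vector index: stores (vector, text) pairs and returns the closest texts."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, vector: list[float], text: str) -> None:
        self.items.append((vector, text))

    @staticmethod
    def cosine(a: list[float], b: list[float]) -> float:
        # Cosine similarity: 1.0 means same direction, near 0.0 means unrelated.
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query: list[float], k: int = 1) -> list[str]:
        ranked = sorted(self.items, key=lambda item: self.cosine(query, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

# Made-up 3-D "embeddings" for hypothetical hospital documents.
store = InMemoryVectorStore()
store.add([0.9, 0.1, 0.0], "Treatment protocol (latest version)")
store.add([0.1, 0.9, 0.1], "Visiting hours policy")
store.add([0.0, 0.2, 0.9], "Cafeteria menu")

query = [0.8, 0.2, 0.1]  # pretend embedding of "latest treatment protocol"
print(store.search(query))  # ['Treatment protocol (latest version)']
```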

Real Business Applications

Customer service

Support teams use LLMs to answer repetitive questions and summarize tickets. Some companies have cut response times by more than half while maintaining accuracy and empathy.

Knowledge management

Law firms, HR departments, and IT teams use LLMs to organize information and answer complex queries. These systems can read thousands of pages in seconds and summarize the key points clearly. According to Softwebsolutions, Royal Bank of Canada (RBC) uses an LLM-based knowledge management system named Arcane. This system utilizes Retrieval-Augmented Generation to help employees quickly find and summarize information from internal policies via a chatbot interface, improving efficiency and compliance in critical business tasks.

Internal productivity

- Developers generate and fix code faster.

- Marketers draft campaigns in minutes.

- Executives summarize reports and meeting notes instantly.

Compliance and Legal

LLMs trained on contracts and policies help spot risky language, check for compliance, and speed up audits. For example, a finance company uses an AI tool to review contracts. It flags missing privacy terms and suggests changes, saving time and avoiding mistakes.

Common Challenges to Consider

Inaccuracy and bias

Language models sometimes make up facts or reflect bias from their training data. Regular testing and human review remain essential.

Data quality

If the training data is messy or outdated, the model will inherit those flaws. Good results come from clean, reliable data.

Cost and infrastructure

Running large models requires high computing power. Many companies start with API-based tools and scale gradually to private or open-source options.

Privacy and regulation

Rules such as GDPR, the EU AI Act, and HIPAA now set strict standards for transparency and data handling. Enterprises should build clear policies around AI use.

Measuring results

Track measurable outcomes like time saved, quality improvements, and cost reduction. Treat AI like any other strategic investment that must prove its value.
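For time saved, the arithmetic can be as simple as comparing the value of hours saved with what the AI costs. The figures below are hypothetical, and a fuller measure would also account for quality improvements and setup costs.

```python
def monthly_roi(hours_saved: float, hourly_cost: float, ai_cost: float) -> float:
    # Net return per dollar spent: (value of time saved - AI cost) / AI cost.
    value = hours_saved * hourly_cost
    return (value - ai_cost) / ai_cost

# Hypothetical numbers: 120 staff hours saved per month at $40/hour,
# against $2,000/month in AI licences and infrastructure.
roi = monthly_roi(hours_saved=120, hourly_cost=40, ai_cost=2000)
print(f"{roi:.0%}")  # 140%
```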

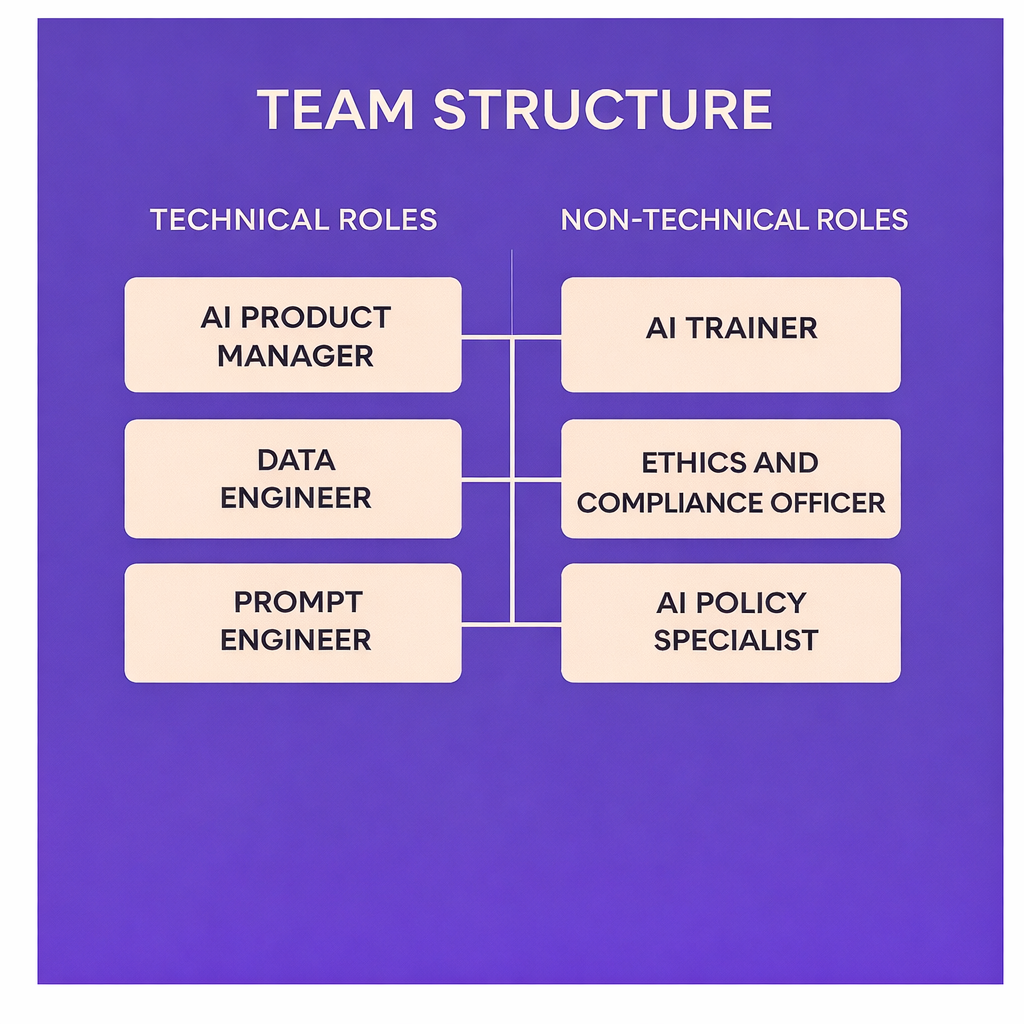

Building the Right Team

Wondering how an AI company works? Well, success with AI depends on people as much as technology. Here are some core roles that most AI companies (or AI departments) rely on.

Core roles

- AI product manager who aligns business goals with model capabilities

- Data engineer who manages data pipelines

- Prompt engineer who crafts effective instructions

- AI trainer who evaluates and improves model output

- Ethics and compliance officer who ensures responsible use

AI literacy for everyone

According to research by MIT and NBER, AI employee training enables workers to automate repetitive tasks, speeding up workflows by 5% to 40%, unlocking higher-value work and better decision-making. Even non-technical employees should know how to interact with AI responsibly. Simple workshops on prompt writing, validation, and data awareness can transform adoption across departments.

Team structures

- Smaller firms can use external APIs and lightweight tools.

- Mid-sized companies may invest in custom setups with retrieval.

- Larger organizations often build full AI ecosystems with monitoring and governance layers.

What Comes Next

- AI That Understands More Than Just Text (Multimodal Intelligence)

New AI tools like GPT-5, Gemini 2.5, and LLaMA 4 can work with images as well as text, and some, such as Gemini 2.5, can also take in audio and video.

For example, you can upload a presentation, and the AI can rewrite or summarize it in seconds.

- AI Agents That Work on Their Own

AI is now becoming more like a smart assistant that can plan, decide, and take action.

For example, you can ask an AI agent to research a topic, write a report, send emails, and even follow up, without giving every single instruction.
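The plan, decide, act pattern behind agents can be sketched as a loop. Here `decide_next_step` is a hypothetical stand-in for the LLM that would normally choose the next action, and the three tools only record text; a real agent would call search, document, and email services. Note that the caller supplies only the goal, not the individual steps.

```python
# Hypothetical tools: each one reads from and writes to a shared `state` dict.
def research(state: dict[str, str]) -> None:
    state["notes"] = f"notes on {state['goal']}"

def write_report(state: dict[str, str]) -> None:
    state["report"] = f"report from {state['notes']}"

def send_email(state: dict[str, str]) -> None:
    state["sent"] = f"emailed {state['report']}"

TOOLS = {"research": research, "write_report": write_report, "send_email": send_email}

def decide_next_step(state: dict[str, str]) -> str:
    # Stand-in for the LLM: picks the next action based on what is already done.
    if "notes" not in state:
        return "research"
    if "report" not in state:
        return "write_report"
    if "sent" not in state:
        return "send_email"
    return "done"

def run_agent(goal: str, max_steps: int = 10) -> tuple[list[str], dict[str, str]]:
    state = {"goal": goal}
    actions: list[str] = []
    for _ in range(max_steps):  # cap the loop so a confused agent cannot run forever
        action = decide_next_step(state)
        if action == "done":
            break
        TOOLS[action](state)  # act, then loop back and decide again
        actions.append(action)
    return actions, state

actions, state = run_agent("LLM adoption in retail")
print(actions)  # ['research', 'write_report', 'send_email']
print(state["sent"])
```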

- Smaller AI You Can Run Anywhere (Local Deployment)

New compact AI models can run on your own computer or company server, with no internet connection needed.

For example, a hospital can run AI tools in-house to keep patient data private and save money on cloud services.

- AI That Explains Itself (Ethical Transparency)

Companies are building tools that show how and why an AI gave a certain answer.

For example, when an AI flags a job application, a dashboard can show which skills were missing, helping avoid bias and build trust.

- Smarter, Safer AI for Sensitive Work (Better Reasoning)

New methods help AI systems reason about cause and effect, and learn from data without sharing private information.

For example, hospitals in different cities can train the same AI model on patient care without sharing patient records.
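This approach is known as federated learning. Below is a sketch of one common variant, federated averaging (FedAvg): each hospital trains a copy of the model on its own records, and only the trained weights are sent back and averaged, weighted by how much data each site has. The one-parameter model and the data points are made up for illustration; real deployments train neural networks.

```python
def local_train(w: float, data: list[tuple[float, float]], lr: float = 0.01, epochs: int = 50) -> float:
    # Fit y ≈ w * x on one hospital's own data with plain gradient descent.
    # The raw (x, y) records never leave this function; only w is returned.
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(weights: list[float], sizes: list[int]) -> float:
    # FedAvg-style step: average local models, weighted by each site's data size.
    total = sum(sizes)
    return sum(w * n for w, n in zip(weights, sizes)) / total

# Hypothetical datasets at two hospitals (made-up numbers where y is roughly 2x).
hospital_a = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]
hospital_b = [(1.5, 3.0), (2.5, 5.1)]

global_w = 0.0
for _ in range(5):  # five rounds of train-locally-then-average
    local_ws = [local_train(global_w, d) for d in (hospital_a, hospital_b)]
    global_w = federated_average(local_ws, [len(hospital_a), len(hospital_b)])
print(round(global_w, 2))
```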

The Road Ahead

As a CTO at Mediusware, I’ve seen how AI has moved from a new idea to a real help in business. At first, we weren’t sure how to use it best, but over time, we learned that success comes from using AI carefully and training our teams well.

AI is not here to replace us but to help us work smarter and faster. The future is bright because AI keeps improving: it’s becoming safer, clearer, and more helpful. By working with AI, we can focus on what people do best: create, solve problems, and lead.

I, Rashedul Islam, firmly believe that the next generation of businesses will not compete only on technology. They will compete on how intelligently they use it.