Contributing high-quality datasets to Kaggle can open doors to opportunities with top global companies such as Google, Microsoft, Amazon, Meta, and IBM, as well as leading Bangladeshi organizations such as Grameenphone, bKash, and Pathao. Kaggle competitions hosted by organizations such as Google Research, NASA, Intel, and Facebook AI can also bring significant rewards, recognition, and professional connections. Beyond job prospects, these contributions help you build a professional portfolio, gain visibility in the data science community, collaborate with practitioners worldwide, and work toward the prestigious title of Kaggle Grandmaster.

-

Learn how to build impactful datasets for Kaggle and data science projects.

-

Understand the key steps in dataset creation, from problem definition to data collection.

-

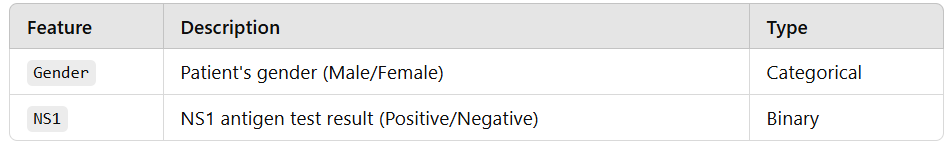

Discover best practices for cleaning, labeling, and structuring data to ensure usability.

-

Gain insights from the author’s journey creating the Dengue Dataset, contributing to public health research.