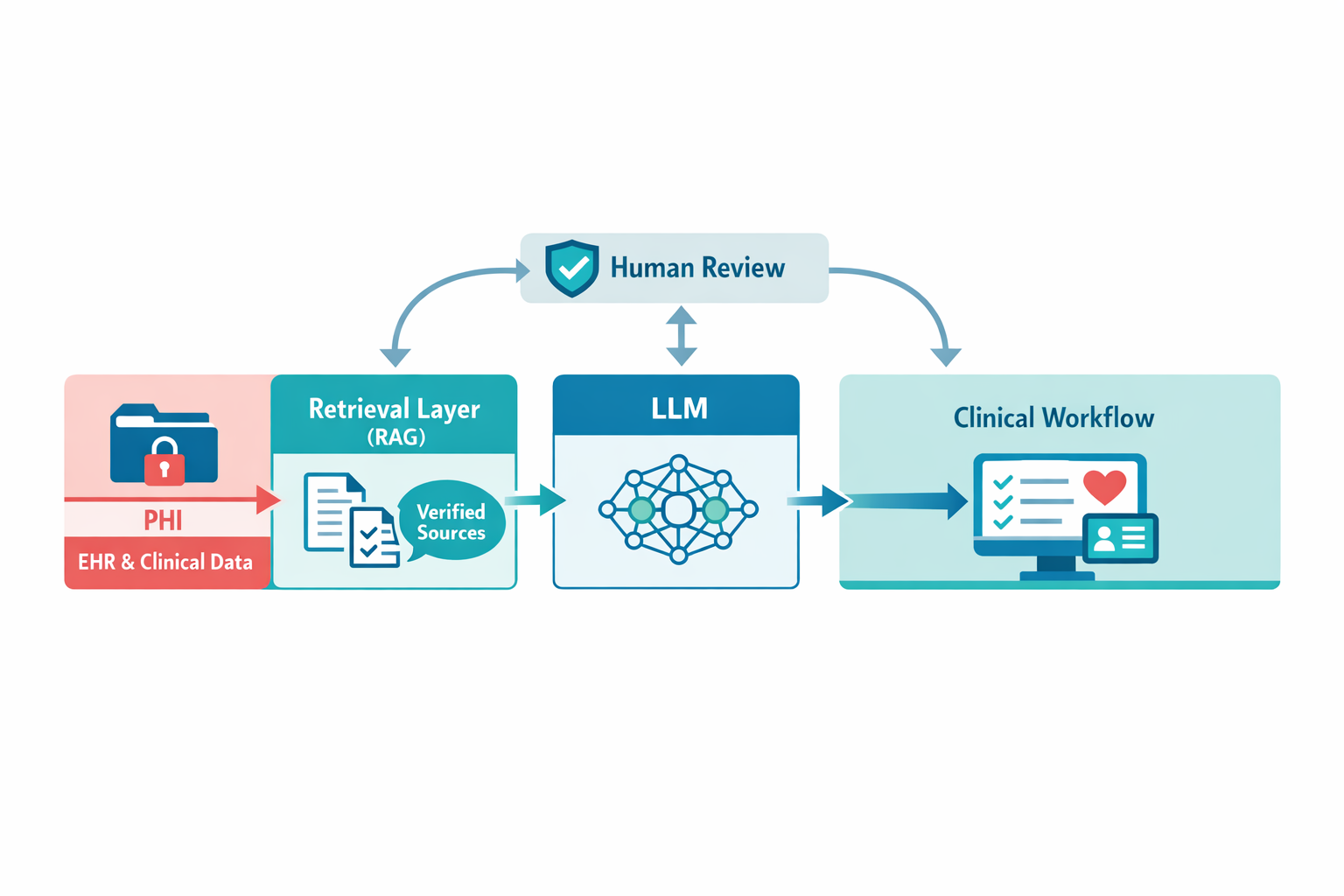

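Yes, when strict data boundaries, access controls, and human review are in place. Inference-only designs, in which patient data is passed to the model at query time but never used to train or fine-tune it, reduce exposure significantly.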

- LLMs and RAG improve healthcare workflows when grounded in trusted data and guided by human oversight.

- Safe deployment focuses on clear data boundaries, explainable retrieval, and predictable clinical support.
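To make these takeaways concrete, here is a minimal sketch of how a data boundary, traceable retrieval, and a human-review gate might fit together. It is an illustration under stated assumptions, not a reference implementation: the names `Document`, `DraftAnswer`, `retrieve`, `draft_answer`, and `CORPUS` are hypothetical, the role labels are invented, and a simple word-overlap score stands in for a real retriever and model call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # hypothetical role labels permitted to read this document


@dataclass
class DraftAnswer:
    text: str
    source_ids: list                 # documents the draft was grounded in
    needs_human_review: bool = True  # every draft starts out awaiting sign-off


def retrieve(query: str, corpus: list, role: str, top_k: int = 2) -> list:
    """Return up to top_k documents the role may read, ranked by word overlap."""
    query_words = set(query.lower().split())
    # Data boundary: drop anything the caller's role is not allowed to see.
    visible = [d for d in corpus if role in d.allowed_roles]
    scored = [(len(query_words & set(d.text.lower().split())), d) for d in visible]
    # Keep only documents that share at least one word with the query.
    matches = [pair for pair in scored if pair[0] > 0]
    ranked = sorted(matches, key=lambda pair: pair[0], reverse=True)
    return [d for _, d in ranked[:top_k]]


def draft_answer(query: str, corpus: list, role: str) -> DraftAnswer:
    """Build a draft that lists its sources and is flagged for human review."""
    docs = retrieve(query, corpus, role)
    if not docs:
        return DraftAnswer("No accessible source found.", [])
    # Stand-in for an inference-only model call: this sketch just quotes the sources.
    summary = " ".join(d.text for d in docs)
    return DraftAnswer(summary, [d.doc_id for d in docs])


# Toy corpus with invented content, for illustration only.
CORPUS = [
    Document("guideline-1", "Metformin dosing guidance for adults",
             frozenset({"clinician", "pharmacist"})),
    Document("note-7", "Patient note on metformin dosing change",
             frozenset({"clinician"})),
]
```

Calling `draft_answer("metformin dosing", CORPUS, "pharmacist")` in this sketch grounds the draft only in `guideline-1`, because the patient note is outside the pharmacist role's boundary. The returned `source_ids` make the retrieval traceable, and `needs_human_review` stays set until a reviewer signs off.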