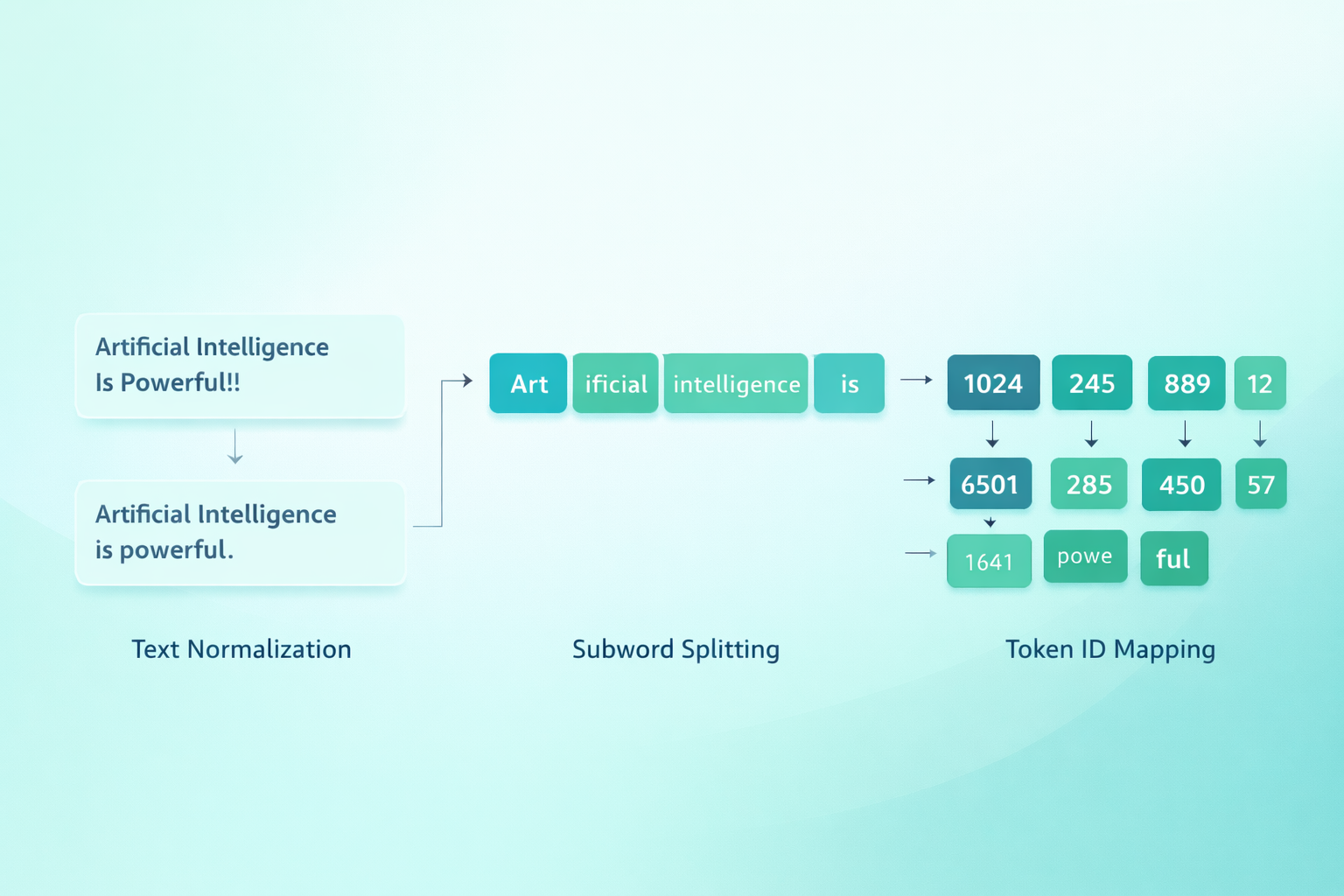

A token is the smallest unit of text a language model processes. It may be a whole word, part of a word, a single character, or a punctuation mark. For example, “ChatGPT” might be split into ["Chat", "GPT"]. Models read their input as a sequence of tokens and generate their output one token at a time.
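To make the idea of splitting text into subword pieces concrete, here is a minimal sketch of greedy longest-match tokenization over a tiny, hypothetical vocabulary. Production tokenizers typically learn their vocabularies from data with algorithms such as byte-pair encoding (BPE) or WordPiece, so treat this as an illustration of the concept rather than how any particular model tokenizes text. The `VOCAB` set and the `tokenize` function are invented for this example.

```python
# Hypothetical toy vocabulary, for illustration only (not a real model's vocabulary).
VOCAB = {"Chat", "GPT", "C", "h", "a", "t", "G", "P", "T", "token", "s", " ", "!"}


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text using greedy longest-match over a fixed vocabulary."""
    tokens: list[str] = []
    max_len = max(len(piece) for piece in vocab)
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until a vocabulary entry matches.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            # No vocabulary entry matches this character: emit an unknown marker.
            tokens.append("<unk>")
            i += 1
    return tokens


print(tokenize("ChatGPT", VOCAB))  # ['Chat', 'GPT']
print(tokenize("tokens!", VOCAB))  # ['token', 's', '!']
```

The second call shows a single word ("tokens") becoming two tokens plus a separate punctuation token, which is why token counts usually exceed word counts.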

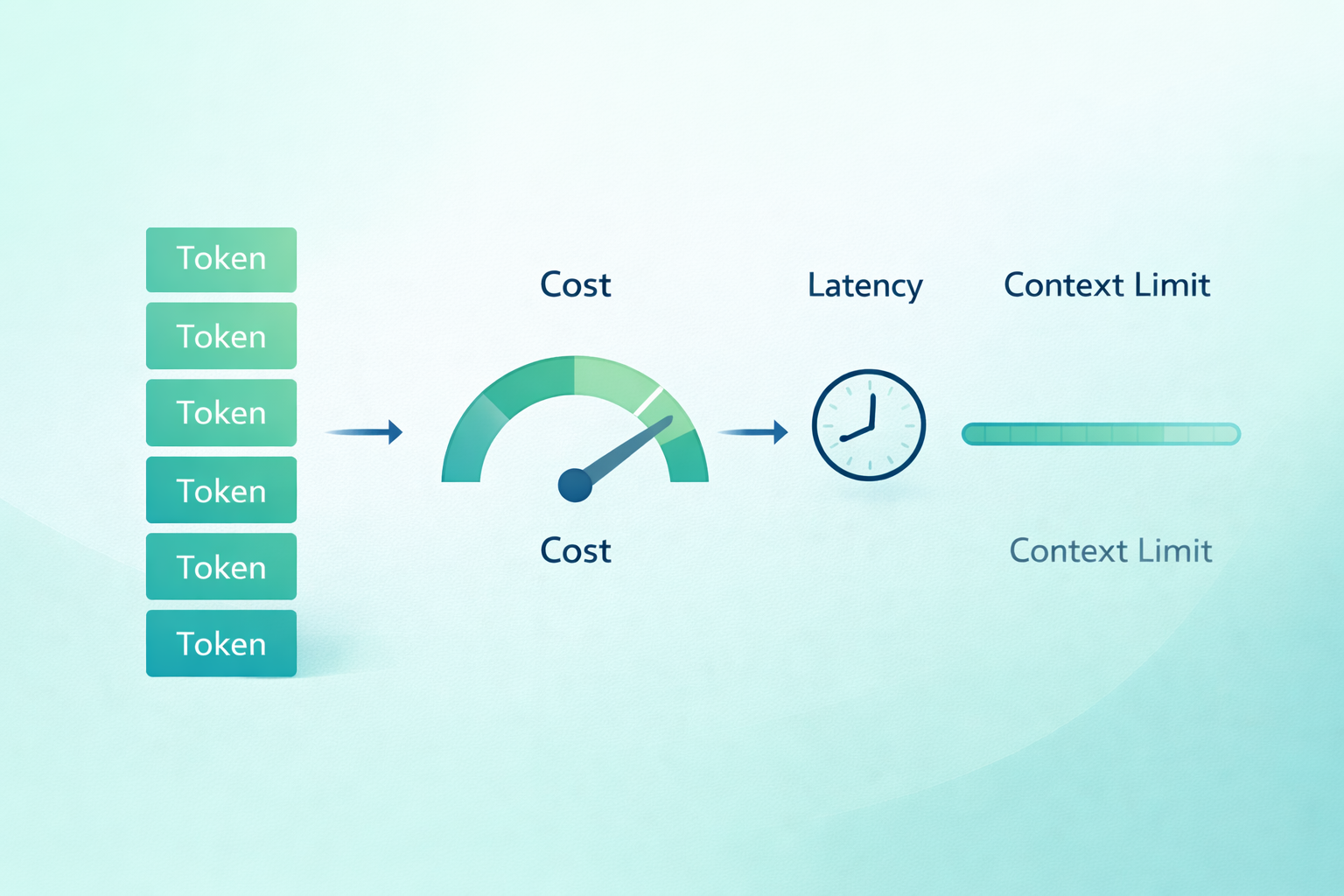

- Explains how LLM tokenization works and why it directly impacts AI cost, speed, and output quality.

- Provides practical insights for founders and CTOs building scalable AI-powered products.
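Many hosted LLM APIs bill per token, often at different rates for input and output tokens, so token counts translate directly into spend. The sketch below shows that arithmetic under hypothetical prices. The `Pricing` class, the `estimate_cost` function, and the dollar rates are illustrative assumptions, not any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    # Hypothetical prices in US dollars per one million tokens.
    input_per_million: float
    output_per_million: float


def estimate_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Estimate the cost of one request under per-token billing."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


# Assumed rates, chosen only to make the arithmetic easy to follow.
pricing = Pricing(input_per_million=2.50, output_per_million=10.00)
cost = estimate_cost(input_tokens=1_200, output_tokens=300, pricing=pricing)
print(f"${cost:.4f}")  # $0.0060
```

Multiplying the per-request figure by expected request volume gives a rough budget, and it shows why trimming prompts or capping output length reduces cost.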